For the “individual profiling” module at university, I created a reinforcement learning agent that works only on visual input and could, in principle, control anything on a computer. It was built mainly to play one particular video game, but it can (in theory) generalize to any task that provides visual input. All it needs is an interface class that converts the network's outputs into the desired actions.
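To illustrate the idea of such an interface class, here is a minimal sketch in Python. It is not taken from the project: the names `ActionInterface`, `KeyboardInterface`, and `apply` are hypothetical, and the sketch assumes the network emits one score per possible action. Instead of sending real key presses to the operating system, it only records the chosen key.

```python
from abc import ABC, abstractmethod
from typing import List, Sequence


class ActionInterface(ABC):
    """Hypothetical adapter between network outputs and a concrete target."""

    @abstractmethod
    def apply(self, outputs: Sequence[float]) -> str:
        """Turn one output vector into one action and return its name."""


class KeyboardInterface(ActionInterface):
    """Maps each output index to a key name (assumed one score per key)."""

    def __init__(self, keys: List[str]) -> None:
        self.keys = keys
        # Recorded here instead of being sent to the OS, to keep the sketch
        # free of platform-specific input libraries.
        self.pressed: List[str] = []

    def apply(self, outputs: Sequence[float]) -> str:
        if len(outputs) != len(self.keys):
            raise ValueError("expected one output per key")
        # Pick the index with the highest score (greedy action selection).
        best = max(range(len(outputs)), key=lambda i: outputs[i])
        key = self.keys[best]
        self.pressed.append(key)
        return key


interface = KeyboardInterface(["left", "right", "none"])
chosen = interface.apply([0.1, 0.7, 0.2])
```

Under this assumption, supporting a different game or program would mean writing another subclass while the agent itself stays unchanged.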

For more information, visit the GitHub repo of the project, which also contains in-depth documentation of how the project was created.

Here are the two main examples, showing the best progress achieved in two different games:

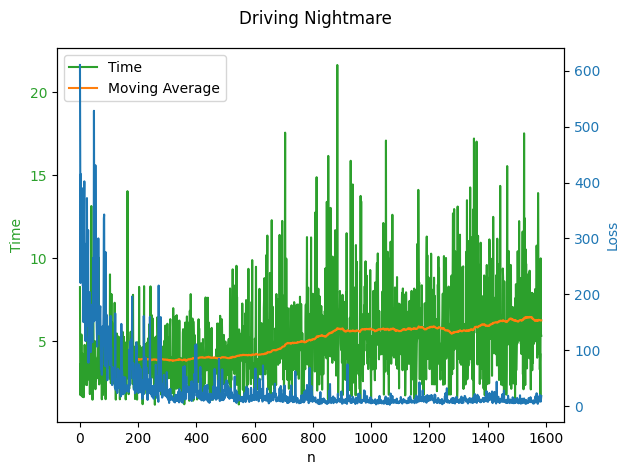

1. Driving nightmare (a game jam game created by a team of three people including me)

This diagram shows the learning progress over 1600 iterations. The green line shows how long each run lasted (the higher the better), and the yellow line shows the average. The “loss” in blue is how far off the model thinks it is from the expected result.

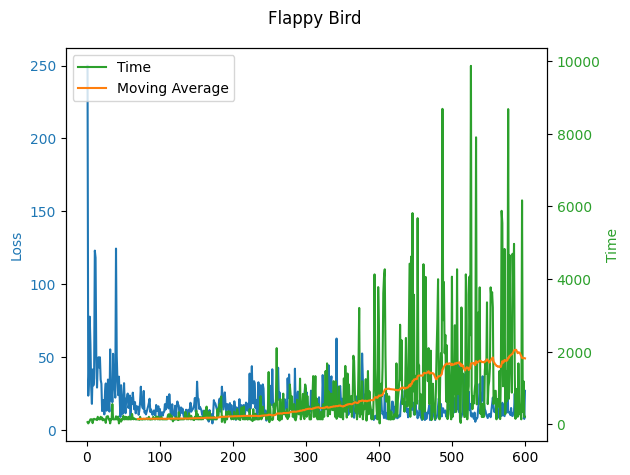

2. A simple Flappy Bird-like program built specifically for the AI. The game can be advanced from code in discrete steps, so it can wait for a slower network without dropping any inputs.
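The step-by-step design can be sketched roughly as follows. This is a simplified illustration, not the project's code: the class `SteppedFlappy`, its fields, and the physics constants are assumptions, and pipes are left out entirely. The point is only that the game state changes when `step` is called and never in between, so a slow network cannot miss a frame.

```python
from dataclasses import dataclass


@dataclass
class SteppedFlappy:
    """Hypothetical game that only advances when step() is called."""

    gravity: float = 0.5         # assumed downward acceleration per tick
    flap_velocity: float = -4.0  # assumed upward velocity after a flap
    height: float = 100.0        # playfield height; y grows downward
    y: float = 50.0
    velocity: float = 0.0
    steps: int = 0
    alive: bool = True

    def step(self, flap: bool) -> bool:
        """Advance exactly one tick and return whether the bird is alive."""
        if not self.alive:
            return False
        # A flap replaces the current velocity; otherwise gravity adds to it.
        self.velocity = self.flap_velocity if flap else self.velocity + self.gravity
        self.y += self.velocity
        self.steps += 1
        # The bird dies when it leaves the playfield at the top or bottom.
        self.alive = 0.0 <= self.y <= self.height
        return self.alive


game = SteppedFlappy()
while game.alive:
    # The agent may take as long as it needs here; the game simply waits.
    flap = game.y > 60.0  # stand-in policy in place of the real network
    game.step(flap)
    if game.steps >= 200:
        break
```

A real-time game, by contrast, keeps running while the network is still computing, which is why the stepped variant is the easier training target.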